Faulty student discipline numbers for at least three Colorado school districts have muddied efforts to track suspension and expulsion trends, complicated advocates’ plans to press for change and caused state officials in one case to take the unusual step of correcting wrong data.

In early August, the advocacy group Padres & Jovenes Unidos, relying on numbers school districts provide to the state, reported surprising news: The rate of out-of-school suspensions in Colorado schools had shot up by 19 percent in the 2014-15 school year after years of decline.

However, three school districts portrayed as largely responsible for the spike all said their data were wrong, turning what had been a big jump in the suspension rate into a small one.

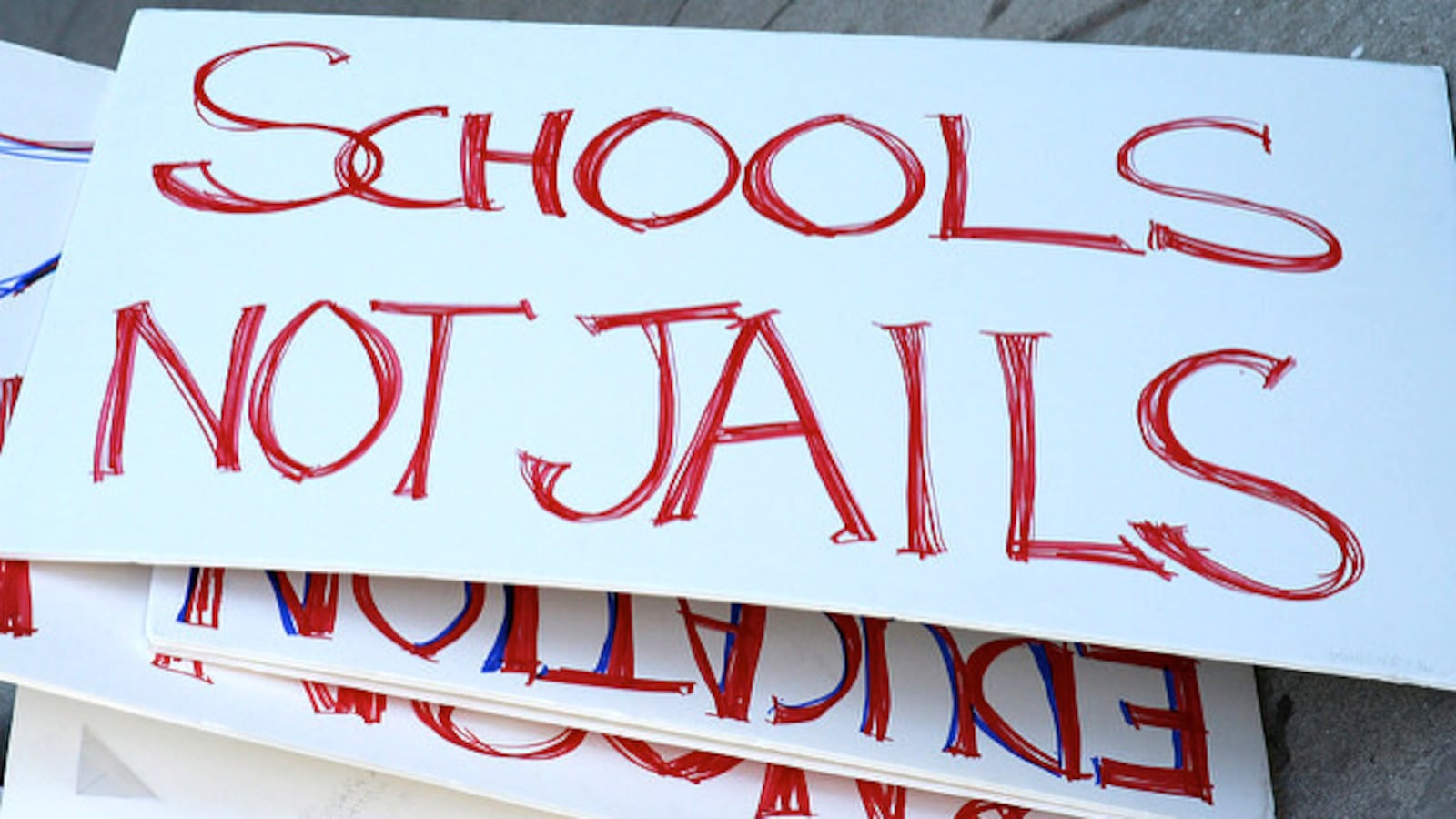

Getting school discipline data right is critical because advocacy groups campaigning to end the “school-to-prison pipeline” rely on the numbers to define the problem and push for changes in state law. Bad numbers can equal bad policy.

“We’re really, really invested in the accuracy of these numbers,” said Daniel Kim, director of youth organizing for Padres, which is widely known for its efforts to prevent harsh school discipline.

“These aren’t just numbers. These are students’ lives. These are the conditions they walk into every day at school. This is the environment of their school. That’s what these numbers reflect.”

Kim said the group plans to add a notation to its report, making clear that the data discrepancies could affect its findings.

The report attributed the 19 percent spike to big increases in the number of out-of-school suspensions in the Adams 12 Five Star, Colorado Springs 11 and Pueblo City 60 districts.

But shortly after the report’s release, Adams 12 came forward to dispute the findings. Officials said staff mistakes resulted in a report to the state that inflated its 2014-15 out-of-school suspension numbers by thousands and failed to include more than 100 expulsions.

After inquiries from Chalkbeat, Colorado Springs and Pueblo said their data were also wrong, but in a different way. Out-of-school suspension numbers in both districts had been significantly under-reported to the state in 2013-14.

Taking into account numbers the districts say are correct, Chalkbeat calculated that the out-of-school suspension rate would have increased by just 2 percent in 2014-15. Similarly, the 7 percent suspension rate decline reported in Padres’ previous discipline report would have been around 1 percent had correct numbers been used for Colorado Springs and Pueblo.

National experts say inaccuracies in discipline data aren’t unique to Colorado and reflect growing pains in the push to elevate school discipline to the same high-profile status as academic achievement.

“This sloppiness wouldn’t be tolerated if it was test scores,” said Daniel Losen, director of the Center for Civil Rights Remedies at the University of California, Los Angeles.

“The more people start looking at school discipline … the more accurate the reporting will get.”

Already, he said, the spotlight on school climate and harsh discipline tactics is brighter because of new provisions in the recently reauthorized federal education law.

There’s also been a growing recognition that suspension and expulsion disproportionately affect students of color and can have devastating lifelong consequences—increasing the risk that kids will drop out and end up incarcerated.

Losen doesn’t blame advocacy groups like Padres for discipline data errors.

“It falls on the district,” he said. “This is the information age. There are so many ways in which we are good at tracking information about people and their experiences. … It’s a little discouraging that schools are so slow in this regard.”

Genesis of a problem

Months before the Padres report came out, there were whispers among some of Colorado’s education insiders about sketchy discipline data.

The Colorado Children’s Campaign, spearheading an effort to reduce suspension and expulsion among young children, asked the state for data on suspension rates in kindergarten through third grade. The numbers that came back showed that Colorado’s public schools had suspended 7,433 students in those grades during 2014-15—with black and Latino children disproportionately affected.

When the Children’s Campaign circulated the aggregate data among key education groups, a few school district lobbyists and officials challenged its accuracy.

“We got a lot of, ‘Can this be right?’ sort of stuff,” said Bill Jaeger, vice president of early childhood initiatives and interim president at the Children’s Campaign.

Jaeger said that while discrepancies in one or two districts probably wouldn’t have affected the big-picture findings, concern about accuracy was one reason the group didn’t publish the numbers on its website or in its signature “Kids Count” report.

“The data question is something we want to get right absolutely,” Jaeger said.

The Children’s Campaign and other advocacy groups had hoped to see legislation on early childhood discipline during the 2016 session, but it never happened.

“There were a lot of folks who didn’t want to come to the table for a variety of reasons,” said Rep. Susan Lontine, D-Denver, who’s been actively involved in discussions on early childhood discipline.

“Some of it was they didn’t think the data was accurate, but there were other reasons, as well.”

Some of those reasons include disagreement about the best way to handle early childhood suspensions and expulsions in Colorado and the fact that preschool discipline data isn’t currently available from any state agency—leaving a big gap in what advocates know about the problem.

Why so much trouble with the data?

The school districts with problematic data cite various reasons, from self-inflicted “uploading errors” to state-level “coding changes.”

In Adams 12, officials submitted the incorrect data to the state in the summer of 2015. Eight months later—around the time the Children’s Campaign was passing around its K-3 data—officials there realized they’d made two major mistakes.

Not only had they reported nearly 9,000 out-of-school suspensions for 2014-15 instead of the actual 3,585, they’d reported expelling no students, when 121 had received that punishment.

They’d managed to look both better and worse than they actually were.

In an attempt to determine the extent of the data reporting problems, Chalkbeat contacted nine other districts, including four others singled out for having big increases in out-of-school suspensions, and the state’s five largest districts, four of which reported suspension declines.

Jeffco, Aurora, Douglas County, Cherry Creek, Greeley-Evans and Harrison said their numbers were correct. Denver didn’t confirm either way. Pueblo and Colorado Springs said they also spotted errors in the numbers that were sent to the state.

Those errors, along with the over-reporting error in Adams 12, made all three districts appear to have far larger year-over-year suspension increases than they really did.

Pueblo 60 spokesman Dalton Sprouse said administrators hadn’t gone back and looked at the erroneous discipline data until Chalkbeat asked about it. He said he’s not sure how the mistake, which resulted in a report of 1,161 suspensions instead of 2,264, occurred.

Colorado Springs 11 spokeswoman Devra Ashby attributed the mistake to a coding change at the state level. She said that in 2013-14, the district was asked to submit the total number of students suspended, not the total number of suspensions, which led to students with multiple suspensions only being counted once. As a result, the district reported 905 suspensions instead of more than 3,200. The next year, Ashby said, the district was asked to submit more detailed data.
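The distinction Ashby describes, counting students suspended versus counting suspensions, can be illustrated with a minimal sketch. The incident log, student IDs and function names below are hypothetical and are not drawn from any district's records or the state's reporting system; the point is only that the two counts diverge whenever a student is suspended more than once.

```python
# Hypothetical incident log: one entry per out-of-school suspension,
# keyed by a made-up student ID. This is NOT real district data.
incidents = ["s01", "s02", "s01", "s03", "s01", "s02"]


def count_suspensions(log):
    """Total suspension incidents: every entry in the log counts."""
    return len(log)


def count_students_suspended(log):
    """Distinct students suspended: repeat suspensions collapse to one."""
    return len(set(log))


total_incidents = count_suspensions(incidents)
distinct_students = count_students_suspended(incidents)

# Six incidents, but only three distinct students.
print(f"suspensions: {total_incidents}, students suspended: {distinct_students}")
```

In this toy log, reporting the student count in place of the incident count would cut the figure in half. The size of the gap in any real district depends on how many students are suspended repeatedly.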

But Jeremy Meyer, spokesman for the Colorado Department of Education, said the state has always asked districts to submit the same data year after year.

Of the three districts that cited problems with their discipline numbers, only Adams 12 asked the state to correct the data. This was in February, eight months after the data was first submitted.

The state initially refused, saying it was too late for corrections.

But last week, it reversed course. Meyer said fixes will be made to Adams 12’s numbers on the department’s website and reports submitted to the federal government.

Meyer said the decision represents a rare exception and doesn’t signify a change to the department’s policy not to correct mistakes after districts officially submit their data.

That’s largely because the correction process is laborious—requiring a series of time-consuming steps at both the district and state level. Meyer said state officials decided to make the change for Adams 12 partly because the discrepancy was so large.

That doesn’t mean the changes will appear on every website or in every publication that published the original numbers.

“There’s a point when you release this information when it takes on a life of its own and it’s difficult to reel it back in,” Meyer said. “We don’t know who’s using it in what report.”