A compromise in a long-running debate over how to evaluate schools is gaining traction as states rewrite their accountability systems. But experts say it could come with familiar drawbacks — especially in fairly accounting for the challenges poor students face.

Under No Child Left Behind, schools were judged by the share of students deemed proficient in math and reading. The new federal education law, the Every Student Succeeds Act (ESSA), gives states flexibility to consider students’ academic growth, too.

This is an approach that some advocates and researchers have long pushed for, saying it is a better way to judge schools that serve students who start far below proficiency.

But some states are proposing measuring academic growth through a hybrid approach that combines both growth and proficiency. (That’s in addition to using proficiency metrics where they are required.) A Chalkbeat review of ESSA plans found that a number of places plan to use a hybrid metric to help decide which of their schools are struggling the most, including Arizona, Connecticut, Delaware, Louisiana, Massachusetts, and Washington, D.C.

The idea has a high-profile supporter: The Education Trust, a civil rights and education group now headed by former U.S. Education Secretary John King. But a number of researchers say the approach risks unfairly penalizing high-poverty schools and maintaining some of the widely perceived flaws of No Child Left Behind.

These questions have emerged because ESSA, the new federal education law, requires states to use academic and other measures to identify 5 percent of their schools as struggling. States have the option to include “academic progress” in their accountability systems, and many are doing so.

This is a welcome trend, says Andrew Ho of Harvard, who has written a book on the different ways to measure student progress. Systems that use proficiency percentages alone, rather than accounting for growth, “are a disaster both for measurement and for usefulness,” Ho said. “They are extremely coarse and dangerously misleading.”

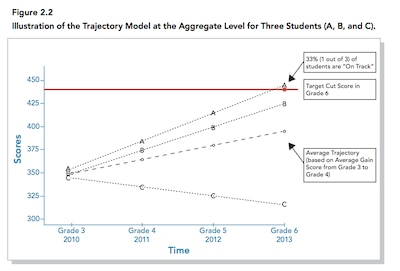

States that propose using this hybrid measure — commonly called “growth to proficiency” or “growth to standard” — have offered varying degrees of specificity in their plans about how they will calculate it. The basic idea is to measure whether students will meet or maintain proficiency within a set period of time, assuming they continue to grow at the same rate. Schools are credited for students deemed on track to meet the standard in the not-too-distant future, even if the students aren’t there yet.
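To make the arithmetic concrete, here is a minimal sketch of how such a measure might be computed. It is an illustration under stated assumptions, not any state's actual formula: it assumes a single fixed proficiency cutoff, a straight-line projection of each student's most recent one-year gain, and made-up scores. The names `Student`, `on_track`, and `school_share_on_track` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Student:
    prior_score: float    # last year's test score (hypothetical scale)
    current_score: float  # this year's test score


def on_track(student: Student, cutoff: float, years_ahead: int) -> bool:
    """Project the current score forward at the same annual gain.

    Assumption: growth is linear and the proficiency cutoff does not
    change from year to year. Real state plans may differ on both.
    """
    annual_gain = student.current_score - student.prior_score
    projected = student.current_score + annual_gain * years_ahead
    return projected >= cutoff


def school_share_on_track(students: list[Student], cutoff: float,
                          years_ahead: int) -> float:
    """Share of a school's students projected to meet or stay above the cutoff."""
    if not students:
        return 0.0
    hits = sum(on_track(s, cutoff, years_ahead) for s in students)
    return hits / len(students)


# Illustrative, invented numbers: cutoff of 300, three-year window.
roster = [
    Student(prior_score=200, current_score=230),  # gains 30, projects to 320
    Student(prior_score=150, current_score=180),  # gains 30, projects to 270
    Student(prior_score=310, current_score=305),  # loses 5, projects to 290
    Student(prior_score=320, current_score=325),  # gains 5, projects to 340
]
share = school_share_on_track(roster, cutoff=300, years_ahead=3)
```

In this invented roster, the first two students post identical 30-point gains, but only the one who started closer to the cutoff counts as on track. A student who is already proficient can count with a much smaller gain.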

This tends to reward schools that serve students who are already near, at, or above the proficiency standard, meaning that schools with a large number of students in poverty will likely get lower scores on average.

It also worries researchers wary of re-creating systems that incentivize schools to focus on students near the proficiency bar, as opposed to those far below or above it. That phenomenon has been observed in some research on accountability systems focused on proficiency.

“As an accountability metric, growth-to-proficiency is a terrible idea for the same reason that achievement-level metrics are a bad idea — it is just about poverty,” said Cory Koedel, an economist at the University of Missouri who has studied school accountability. He has argued that policymakers should try to ensure ratings are not correlated with measures of poverty.

Researchers tend to say that the strongest basis for sorting out the best and worst schools (at least as measured by test scores) is to rely on sophisticated value-added calculations. Those models control for where students start, as well as demographic factors like poverty.

“If there are going to be high stakes — and I don’t suggest that there should be — then the more technically rigorous value-added models become the best way to approach teacher- and school-level accountability,” said Ho.

A large share of states are planning to use a value-added measure or similar approach as part of their accountability systems, in several cases alongside the growth-to-proficiency measure.

Some research has found that these complex statistical models can be an accurate gauge of how teachers and schools affect students’ test scores, though that finding remains the subject of significant academic debate.

But The Education Trust, which has long backed test-based accountability, is skeptical of these growth models, saying that they water down expectations for disadvantaged students and don’t measure whether students will eventually reach proficiency.

“Comparisons to peers won’t reveal whether that student will one day meet grade-level standards,” the group’s Midwest chapter stated in a report on Michigan’s ESSA state plan. “This risks setting lower expectations for students of color and low-income students, and does not incentivize schools to accelerate learning for historically underserved student groups.”

In an email, Natasha Ushomirsky, EdTrust’s policy director, said the group supports measures like growth to proficiency over value-added models “because a) they do a better job of communicating expectations for raising student achievement, and b) they can be used to understand whether schools are accelerating learning for historically underserved students, and prompt them to do so.”

Of the value-added approach, Ushomirsky said, “A lower-scoring student is likely to be compared only to other lower-scoring students, while a higher-scoring student is compared to other higher-scoring students. This means that the same … score may represent very different amounts of progress for these two students.”

Marty West, a professor at Harvard, says the most prudent approach is to report proficiency data transparently, but to use value-added growth to identify struggling schools for accountability purposes.

“There are just too many unintended consequences from using [proficiency] or any hybrid approach as the basis of your performance evaluation system,” he said.

“The most obvious is making educators less interested in teaching in [high-poverty] schools because they know they have an uphill battle with respect to any accountability rating — and that’s the last thing we want.”

This story has been updated to include additional information from Education Trust.