Barack Obama’s 2012 State of the Union address reflected the heady moment in education. “We know a good teacher can increase the lifetime income of a classroom by over $250,000,” he said. “A great teacher can offer an escape from poverty to the child who dreams beyond his circumstance.”

Bad teachers were the problem; good teachers were the solution. It was a simplified binary, but the idea and the research it drew on had spurred policy changes across the country, including a spate of laws establishing new evaluation systems designed to reward top teachers and help weed out low performers.

Behind that effort was the Bill and Melinda Gates Foundation, which backed research and advocacy that ultimately shaped these changes.

It also funded the efforts themselves, specifically in several large school districts and charter networks open to changing how teachers were hired, trained, evaluated, and paid. Now, new research commissioned by the Gates Foundation finds scant evidence that those changes accomplished what they were meant to: improve teacher quality or boost student learning.

The 500-plus page report by the Rand Corporation, released Thursday, details the political and technical challenges of putting complex new systems in place and the steep cost — $575 million — of doing so.

The post-mortem will likely serve as validation for the foundation’s critics, who have long complained about Gates’ heavy influence on education policy and what they call its top-down approach.

The report also comes as the foundation has shifted its priorities away from teacher evaluation and toward other issues, including improving curriculum.

“We have taken these lessons to heart, and they are reflected in the work that we’re doing moving forward,” the Gates Foundation’s Allan Golston said in a statement.

The initiative did not lead to clear gains in student learning.

Results were spotty at the three districts and four California-based charter school networks that took part in the Gates initiative: Pittsburgh; Shelby County (Memphis), Tennessee; Hillsborough County, Florida; and the Alliance-College Ready, Aspire, Green Dot, and Partnerships to Uplift Communities networks. Trends over time didn’t look much better than those at similar schools in the same state.

Several years into the initiative, there was evidence that it was helping high school reading in Pittsburgh and at the charter networks, but hurting elementary and middle school math in Memphis and among the charters. In most cases there were no clear effects, good or bad. There was also no consistent pattern of results over time.

A complicating factor here is that the comparison schools may also have been changing their teacher evaluations, as the study spanned from 2010 to 2015, when many states passed laws putting in place tougher evaluations and weakening tenure.

There were also lots of other changes going on in the districts and states (like the adoption of Common Core standards, changes in state tests, and the expansion of school choice), making it hard to isolate cause and effect. Studies in Chicago, Cincinnati, and Washington, D.C., have found that evaluation changes had more positive effects.

Matt Kraft, a professor at Brown who has extensively studied teacher evaluation efforts, said the disappointing results in the latest research couldn’t simply be chalked up to a messy rollout.

These “districts were very well poised to have high-quality implementation,” he said. “That speaks to the actual package of reforms being limited in its potential.”

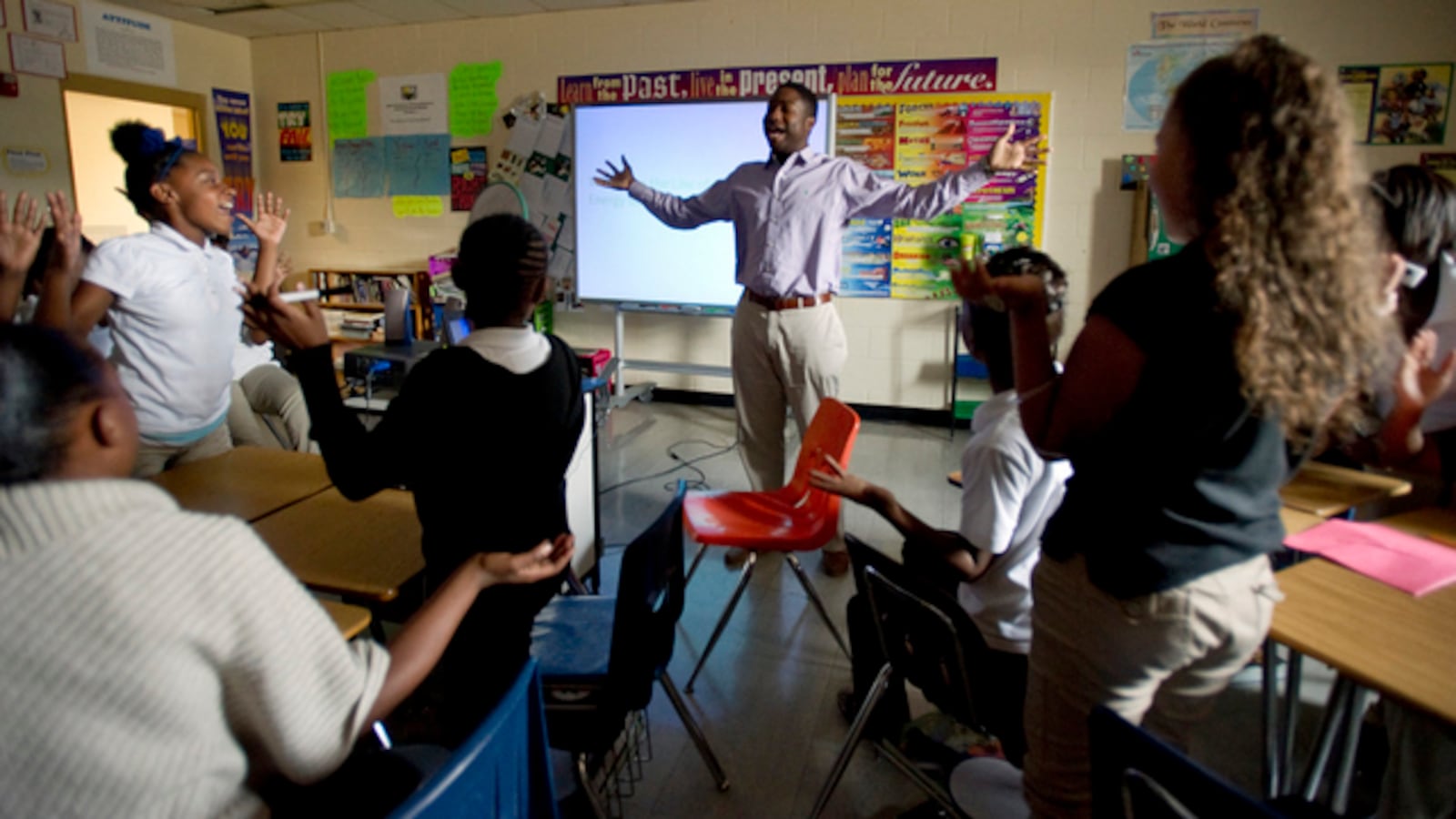

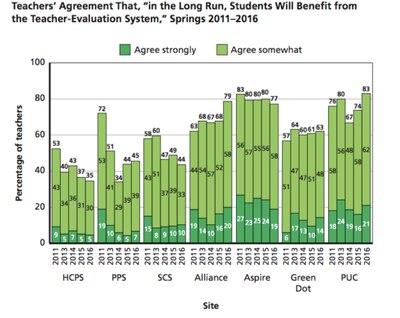

Principals were generally positive about the changes, but teachers had more complicated views.

From Pittsburgh to Tampa, Florida, the vast majority of principals agreed at least somewhat that “in the long run, students will benefit from the teacher-evaluation system.”

Teachers in district schools were far less confident.

When the initiative started, a majority of teachers in all three districts tended to agree with the sentiment. But several years later, support had dipped substantially. The shift may have reflected initial dissatisfaction with the previous system (the researchers note that “many veteran [Pittsburgh] teachers we interviewed reported that their principals had never observed them”) followed by growing disillusionment with the new one.

Majorities of teachers in all locations reported that they had received useful feedback from their classroom observations and changed their habits as a result.

At the same time, teachers in the three districts were highly skeptical that the evaluation system was fair — or that it made sense to attach high-stakes consequences to the results.

The initiative didn’t help ensure that poor students of color had more access to effective teachers.

Part of the impetus for evaluation reform was the idea, backed by some research, that black and Hispanic students from low-income families were more likely to have lower-quality teachers.

But the initiative didn’t seem to make a difference. In Hillsborough County, inequity expanded. (Surprisingly, before the changes began, the study found that low-income kids of color actually had similar or slightly more effective teachers than other students in Pittsburgh, Hillsborough County, and Shelby County.)

Districts put in place modest bonuses to get top teachers to switch schools, but the evaluation system itself may have been a deterrent.

“Central-office staff in [Hillsborough County] reported that teachers were reluctant to transfer to high-need schools despite the cash incentive and extra support because they believed that obtaining a good VAM score would be difficult at a high-need school,” the report says.

Evaluation was costly — both in terms of time and money.

The total direct cost of all aspects of the program, across several years in the three districts and four charter networks, was $575 million.

That amounts to between 1.5 and 6.5 percent of district or network budgets, or a few hundred dollars per student per year. Over a third of that money came from the Gates Foundation.

The study also quantifies the strain of the new evaluations on school leaders’ and teachers’ time as costing upwards of $200 per student, nearly doubling the price tag in some districts.

Teachers tended to get high marks on the evaluation system.

Before the new evaluation systems were put in place, the vast majority of teachers got high ratings. That hasn’t changed much, according to this study, which is consistent with national research.

In Pittsburgh, in the initial two years, when evaluations had low stakes, a substantial number of teachers got low marks. That drew objections from the union.

“According to central-office staff, the district adjusted the proposed performance ranges (i.e., lowered the ranges so fewer teachers would be at risk of receiving a low rating) at least once during the negotiations to accommodate union concerns,” the report says.

Morgaen Donaldson, a professor at the University of Connecticut, said the initial buy-in followed by pushback isn’t surprising, pointing to her own research in New Haven.

To some, aspects of the initiative “might be worth endorsing at an abstract level,” she said. “But then when the rubber hit the road … people started to resist.”

More effective teachers weren’t more likely to stay teaching, but less effective teachers were more likely to leave.

The basic theory of action behind evaluation changes is to get more effective teachers into the classroom and keep them there, while getting less effective ones out or helping them improve.

The Gates-commissioned research found that the new initiatives didn’t get top teachers to stick around any longer. But there was some evidence that the changes made lower-rated teachers more likely to leave. Fewer than 1 percent of teachers were formally dismissed in the places where data was available.

After the grants ran out, districts scrapped some of the changes but kept a few others.

One key test of success for any foundation initiative is whether it is politically and financially sustainable after the external funds run out. Here, the results are mixed.

Both Pittsburgh and Hillsborough have ended high-profile aspects of their programs: Pittsburgh its merit pay system, and Hillsborough its use of peer evaluators.

But other aspects of the initiative have been maintained, according to the study, including the use of classroom observation rubrics, evaluations that use multiple metrics, and certain career-ladder opportunities.

Donaldson said she was surprised that the peer evaluators didn’t go over well in Hillsborough. Teachers unions have long promoted peer-based evaluation, but district officials said that a few evaluators who were rude or hostile soured many teachers on the concept.

“It just underscores that any reform relies on people — no matter how well it’s structured, no matter how well it’s designed,” she said.

Correction: A previous version of this story stated that about half of the money for the initiative came from the Gates Foundation; in fact, the foundation’s share was 37 percent or about a third of the total.